On July 24th, 2020, I sat and passed the Certified Kubernetes Administrator exam on the first attempt.

Motivation:

I started looking into the Kubernetes platform seriously in October last year, when I attended a two-day Kubernetes Bootcamp in Paris. The training gave me a basic understanding, but there were a lot of missing pieces, which led me to set up a small Kubernetes cluster on my laptop to get more hands-on exposure to the platform. The good thing about a Kubernetes home lab is that you don’t need fancy home lab gear. I was able to set up a 5-node Kubernetes cluster with Ubuntu nodes using VMware Workstation on my laptop (4 x 2.8 GHz CPU and 32 GB of RAM) without much trouble.

Another reason for learning Kubernetes was to align with VMware’s strategy towards Kubernetes. With the recent VMware acquisitions (Heptio, Bitnami, Pivotal, and Octarine) and the way we rearchitected vSphere (Project Pacific) to deeply embed and integrate Kubernetes, we believe Kubernetes will prove to be the cloud normalization layer of the future.

Preparation:

I started with the Kubernetes Fundamentals (LFS 258) course from the Linux Foundation:

https://training.linuxfoundation.org/training/kubernetes-fundamentals/

I went through the course once at a high level and then enrolled in Mumshad Mannambeth’s Certified Kubernetes Administrator course on KodeKloud (kodekloud.com):

https://kodekloud.com/p/certified-kubernetes-administrator-with-practice-tests

Mumshad’s course is like a breath of fresh air: very well explained and articulated, with practice labs. I followed the course thoroughly a couple of times, along with the Kubernetes.io documentation, for my preparation. For anyone preparing for the CKA, I would highly recommend this course.

Exam Curriculum:

The CKA exam covers the following domains, the concepts you will be tested on, and their weights on the exam:

Application Lifecycle Management – 8%

- Understand deployments and how to perform rolling updates and rollbacks

- Know various ways to configure applications

- Know how to scale applications

- Understand the primitives necessary to create a self-healing application

Installation, Configuration & Validation – 12%

- Design a Kubernetes Cluster

- Install Kubernetes Masters and Nodes

- Configure secure cluster communication

- Configure a highly-available Kubernetes cluster

- Know where to get the Kubernetes release binaries

- Provision underlying infrastructure to deploy a Kubernetes cluster

- Choose a network solution

- Choose your Kubernetes infrastructure configuration

- Run end-to-end tests on your cluster

- Analyze end-to-end test results

- Run Node end-to-end Tests

- Install and use kubeadm to install, configure, and manage Kubernetes clusters

Core Concepts – 19%

- Understand the Kubernetes API primitives

- Understand the Kubernetes cluster architecture

- Understand Services and other network primitives

Networking – 11%

- Understand the networking configuration on the cluster nodes

- Understand Pod networking concepts

- Understand Service Networking

- Deploy and configure a network load balancer

- Know how to use Ingress rules

- Know how to configure and use the cluster DNS

- Understand CNI

Scheduling – 5%

- Use label selectors to schedule Pods

- Understand the role of DaemonSets

- Understand how resource limits can affect Pod scheduling

- Understand how to run multiple schedulers and how to configure Pods to use them

- Manually schedule a pod without a scheduler

- Display scheduler events

Security – 12%

- Know how to configure authentication and authorization

- Understand Kubernetes security primitives

- Know how to configure network policies

- Create and manage TLS certificates for cluster components

- Work with images securely

- Define security contexts

- Secure persistent key-value store

Cluster Maintenance – 11%

- Understand Kubernetes cluster upgrade process

- Facilitate operating system upgrades

- Implement backup and restore methodologies

Logging / Monitoring – 5%

- Understand how to monitor all cluster components

- Understand how to monitor applications

- Manage cluster component logs

- Manage application logs

Storage – 7%

- Understand persistent volumes and know how to create them

- Understand access modes for volumes

- Understand the persistent volume claims primitive

- Understand Kubernetes storage objects

- Know how to configure applications with persistent storage

Troubleshooting – 10%

- Troubleshoot application failure

- Troubleshoot control plane failure

- Troubleshoot worker node failure

- Troubleshoot networking

Exam Experience:

- I had a bit of fun with the exam. The first time I scheduled it, in the second week of July 2020, the proctor was not able to start the screen share for technical reasons. I later found out it was a firewall issue: I was using my employer’s laptop and, because of the group policy configuration, I was not able to disable the firewall. A handy little tip: use a personal laptop if possible, or make sure there are no firewall rules blocking remote connections.

- While Kelsey Hightower’s “Kubernetes The Hard Way” is an awesome resource for understanding the Kubernetes bootstrapping process and how the various control plane components are configured and interact with each other, from the exam perspective make sure you practice and get comfortable with installing and upgrading the Kubernetes control plane using kubeadm.

- I got 24 questions in total to solve in 180 minutes, which is 7.5 minutes per question. Note that not all questions carry the same marks, so a good strategy is to knock down the tricky, lengthy questions that carry more marks first, before taking on the simple ones. I took a rather simple approach and went through the questions in the order they came; however, I felt it would have been better to solve the complex, multi-task questions at the start, when energy and concentration levels are relatively high.

- I have read a couple of threads and blogs online where people mentioned that some topics can be skipped because they don’t come up in the exam. Make sure to cover all the topics in the exam curriculum; I was tested on almost all the concepts mentioned in the exam blueprint.

- Copy-paste works well within the exam console and from outside without any issues. For Mac: ⌘+C to copy and ⌘+V to paste. For Windows: Ctrl+Insert to copy and Shift+Insert to paste.

- For static pod questions, use the Notepad (on the top menu under ‘Exam Controls’) to write the YAML file, then SSH into the respective node and paste the manifest file into the right directory (the kubelet’s static pod path).

- The first thing you should do before attempting the first question is to set up autocompletion permanently in your bash shell. This will save a lot of time when typing commands:

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

- Get familiar with systemctl for managing systemd services; it will help you with the troubleshooting questions on worker node failure scenarios.

- The passing score is 74%, which I believe is on the higher side for an exam that is purely hands-on with no multiple-choice questions. It’s critical to attempt all questions: many questions have multiple tasks, and you get partial marks for the tasks you complete. Leaving a question unattempted would be like shooting yourself in the foot.

- Although you are allowed to search and copy-paste from the Kubernetes.io portal during the exam, you don’t want to waste time scrolling through the documentation trying to find information. Get familiar with the Kubernetes.io portal beforehand; it will help you navigate and find the right information, command, or YAML template without wasting time.

- Make yourself comfortable with kubectl commands so that you don’t need to search the Kubernetes documentation for every question. Use --dry-run together with -o yaml to generate YAML definitions that you can redirect to a file, modify, and then use to create the required resource. On kubectl v1.18, a bare --dry-run still works but is deprecated in favor of --dry-run=client.

- In terms of difficulty, I would rate the CKA equal to or slightly tougher than the VMware VCAP Deploy exam, because you are under constant time pressure.

- The exam is based on Kubernetes v1.18 and consists of 6 Kubernetes clusters. The context to use for each question is provided in the exam console.

- The CKA exam with Kubernetes 1.19 will be available to schedule from September 1st, 2020. I don’t expect a lot of changes in the exam pattern or the number of questions, but you never know. If you are confident and prepared, I would recommend giving it a shot now.
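
For the static pod tip in the list above, the manifest itself is short. The sketch below is a hypothetical example (the name static-web, the label, and the nginx image are placeholders, not taken from any exam question); it writes the manifest with a heredoc, which also works if you paste it straight into the terminal after SSHing into the node:

```shell
# Hypothetical example: a minimal static Pod manifest named static-web.
# Written to the current directory; copy it into the kubelet's static pod path afterwards.
cat > static-web.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: static-web
  labels:
    role: static-web
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
EOF
```

On a kubeadm-built node the kubelet’s staticPodPath (set in /var/lib/kubelet/config.yaml) usually points to /etc/kubernetes/manifests, so copying the file there with sudo should be enough for the kubelet to start the pod. The mirror pod then shows up in kubectl get pods with the node name appended, for example static-web-node01 on a node called node01.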
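
Building on the autocomplete tip in the list above, a shorter alias can save even more typing. This is an optional extra, assuming a bash shell; it relies on the __start_kubectl function that the kubectl completion bash script defines, so completion for k only works once that script has been sourced:

```shell
# Optional: short alias for kubectl that keeps tab completion working.
# __start_kubectl comes from "kubectl completion bash", sourced via ~/.bashrc.
echo 'alias k=kubectl' >> ~/.bashrc
echo 'complete -o default -F __start_kubectl k' >> ~/.bashrc
```

After opening a new shell (or running source ~/.bashrc), commands such as k get pods behave exactly like kubectl get pods, including tab completion.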

Important Commands:

Below are some commands that will be handy during the exam. I am not covering all of them in detail; these are just examples.

Pod

Create an alpine Pod

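$ kubectl run --generator=run-pod/v1 alpine --image=alpine -- sleep 3600

The alpine image has no long-running process by default, so without a command such as sleep 3600 after -- the container exits immediately and the pod keeps restarting.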

Generate the Pod manifest YAML file to run an Nginx pod, using the --dry-run option together with -o yaml:

$ kubectl run --generator=run-pod/v1 nginx --image=nginx --dry-run -o yaml

Deployment

Create a deployment

$ kubectl create deployment --image=nginx nginx

Generate Deployment YAML file

$ kubectl create deployment --image=nginx nginx --dry-run -o yaml

Create a Deployment with 3 replicas (--replicas=3)

$ kubectl create deployment nginx --image=nginx

$ kubectl scale deployment nginx --replicas=3

Note:

As of kubectl v1.18 (the version used in the exam), kubectl create deployment does not have a --replicas option. Create the deployment and then scale it using the kubectl scale command.
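
If you would rather end up with a YAML file, as with the --dry-run commands above, the replica count can be set directly in the manifest. A minimal sketch, assuming the same nginx name and the app: nginx label that kubectl create deployment nginx generates; the file name nginx-deployment.yaml is just an example:

```shell
# Declarative alternative: a Deployment manifest with the replica count set up front.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
EOF
```

On the cluster, kubectl apply -f nginx-deployment.yaml would then create the Deployment with 3 replicas in one step. Note that the selector’s matchLabels must match the template labels, otherwise the API server rejects the Deployment.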

Service

Create a Service named httpd-service of type ClusterIP to expose pod httpd on port 80

$ kubectl expose pod httpd --port=80 --name httpd-service --dry-run -o yaml

This will use the pod’s labels as selectors.

Other option:

$ kubectl create service clusterip httpd --tcp=80:80 --dry-run -o yaml

This command assumes the selector app=httpd. You cannot pass in selectors as an option, so it does not work if the pod has a different label set. Therefore, generate the file and modify the selectors before creating the service.
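
After generating the file, the part to change is the selector. A minimal sketch of the edited Service, assuming the httpd pod was created with kubectl run httpd (which labels the pod run=httpd); check the pod’s real labels with kubectl get pod httpd --show-labels and adjust the selector to match:

```shell
# Edited ClusterIP Service: the selector must match the pod's actual labels.
cat > httpd-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: httpd-service
spec:
  type: ClusterIP
  selector:
    run: httpd      # assumption: label added by "kubectl run httpd"
  ports:
  - port: 80
    targetPort: 80
EOF
```

Then kubectl apply -f httpd-service.yaml creates the Service, and kubectl get endpoints httpd-service should list the pod’s IP if the selector matches.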

Create a Service named nginx of type NodePort to expose pod nginx’s port 80 on port 30082 on the nodes:

$ kubectl expose pod nginx --port=80 --name nginx --type=NodePort --dry-run -o yaml

The above command automatically uses the pod’s labels as selectors; however, you cannot specify the node port. Generate the definition file using --dry-run and -o yaml, and add the node port manually before creating the service.

Or

$ kubectl create service nodeport nginx --tcp=80:80 --node-port=30082 --dry-run -o yaml

This command will not use the pod’s labels as selectors; it assumes the selector app=nginx, so check it against the pod’s actual labels.
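
Either way, the finished definition needs both a selector that matches the pod and the fixed nodePort. A minimal sketch, assuming the nginx pod was created with kubectl run nginx (label run=nginx); 30082 falls inside the default NodePort range of 30000-32767:

```shell
# NodePort Service exposing pod port 80 on port 30082 of every node.
cat > nginx-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    run: nginx      # assumption: label added by "kubectl run nginx"
  ports:
  - port: 80        # Service (cluster IP) port
    targetPort: 80  # container port on the pod
    nodePort: 30082 # port opened on each node
EOF
```

After kubectl apply -f nginx-service.yaml, the pod should be reachable on port 30082 of any node’s IP.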

As I mentioned, I have not covered other important topics from the exam perspective, e.g. PV, PVC, networking, network policies, Ingress, RBAC, Roles & Role Bindings, Secrets, ConfigMaps, commands & arguments, rolling updates, installation and upgrades using kubeadm, application, control plane, and node failure troubleshooting scenarios, static pods, node affinity, taints and tolerations, manual scheduling, etc.

If you are planning to go for the CKA, all the best, and go for it. I hope you find this blog helpful.

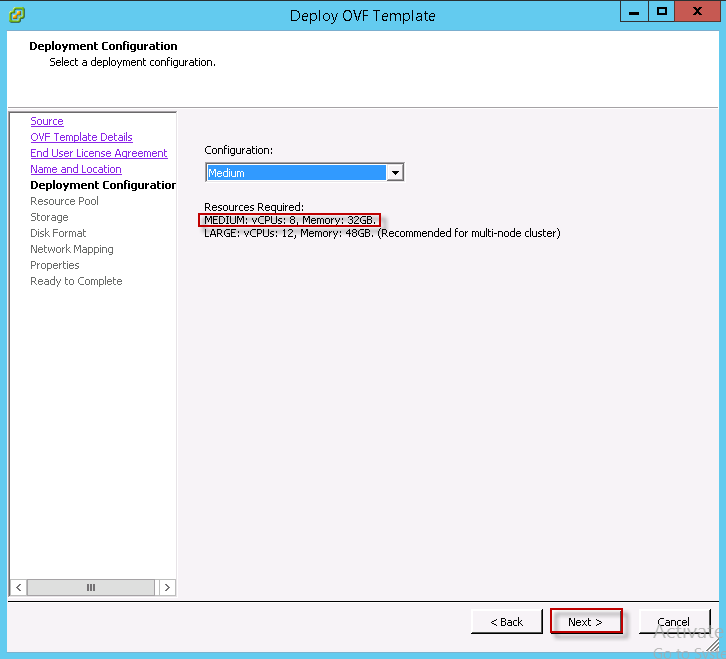

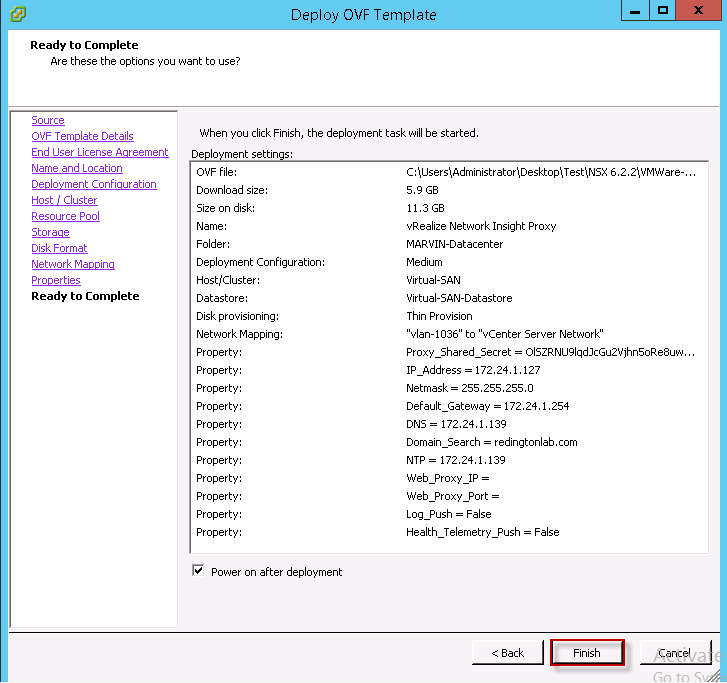

For my environment, I am going with the medium configuration.
